Why AI matters in New Zealand right now

What NZ organisations are seeing

AI has moved from talk to action. You see it in routine tasks first. Document summaries. Meeting notes. Faster responses to customers. Teams report quicker turnarounds and fewer repeat errors. Managers notice shorter queues and cleaner data. It is not magic. It is useful, and that is enough.

Common wins show up in three places. Frontline teams reduce handle time and raise first-contact resolution. Back offices automate repetitive checks and form processing. Leaders get better visibility across content and decisions. You might already be using AI features inside tools you own.

The pattern is repeatable. Start small. Prove value. Expand to the next workflow. New Zealand organisations do this while staying pragmatic about cost and risk. That balance matters here. We are a smaller market. Budgets are tight. People want results that stick.

If you have not started yet, you are not late. Pick a simple use case with a clear measure, like time saved per ticket. Keep scope tight. You will learn fast and avoid surprises.

The real problems holding teams back

Most delays are not technical. They are human and process issues. People worry about privacy and mistakes. Leaders want proof before they spend. Data lives in scattered systems. Policies lag behind behaviour, so shadow tools creep in.

You can reduce friction by naming the common blockers upfront. Lack of a clear business case. Unclear ownership. No approval path for pilots. Weak guidance on what data to share. Thin training for everyday users. Each of these stalls momentum.

Fix the basics early. Write a one-page policy that sets simple rules for safe use. Define one owner for each pilot. Decide what success looks like. Keep legal and security in the loop without slowing everything down. Offer short, hands-on training. People learn by doing.

Expect bumps. Models will get things wrong. Colleagues will worry about change. That is normal. Treat pilots as learning time. Close what does not work. Scale what does.

What “good” looks like in year one

Year one is about learning and results, not grand plans. A good programme brings three small wins to production and retires one idea that did not deliver. It sets guardrails, measures outcomes, and builds team confidence.

Aim for a simple rhythm. Quarter one proves a fast win with clear numbers. Quarter two expands to a second workflow with new stakeholders. Quarter three hardens the controls. Quarter four tidies up processes and training. Keep the roadmap flexible.

A healthy setup shows a few signals. People know where to ask questions. Risk owners have visibility without blocking experiments. IT can deploy updates and roll back if needed. Finance sees a link between the work and saved time or better conversions.

Avoid over-engineering. You do not need a full platform strategy on day one. Start where value is clear and data is available. Document what you learn. That knowledge will guide your next decisions.

What the NZ data says

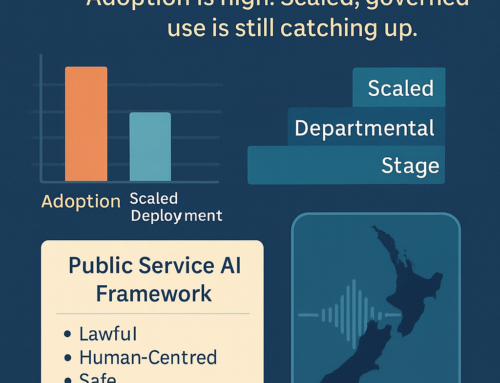

Adoption and impact highlights from recent NZ studies

Across New Zealand, adoption keeps rising. Most organisations have tried at least one AI feature in mainstream tools. A growing share report tangible benefits. Faster document handling. Quicker customer replies. Better search over internal knowledge. These are modest shifts that add up across a year.

The pattern varies by sector. Professional services and tech move earlier. Government and health move with more care. Small and mid-sized firms adopt when tools fold into what they already use. That means email, documents, and ticketing systems. People prefer gains that feel immediate.

Reported impact clusters around time saving. Teams finish the same work in fewer minutes. Accuracy improves where quality checks exist. Where checks are missing, rework appears. That tells you the real lesson. Pair AI with simple controls and you keep the gains.

If you want benchmarks, look for New Zealand-specific surveys and vendor usage overviews. Treat global stats as context only. Local constraints, from budget to data policy, shape outcomes here.

The top barriers to success

Barriers repeat across organisations of all sizes. Data quality is the big one. If your source content is messy, AI will echo that mess. Governance is next. Without guardrails, people do risky things by accident. Cost and skills follow. Leaders need a case they can trust. Staff need time and practice.

There are softer barriers too. Fear of change. Worries about job security. Mixed messages about what is allowed. Unclear ownership. These slow progress more than most technical roadblocks.

Work through them with a simple plan. Clean one dataset that is central to the first use case. Publish a policy that sets rules in plain English. Pilot with a small group and train them well. Measure time saved, error rates, and customer feedback. Share results widely.

Finally, keep compliance in view. Privacy, security, and sector rules are part of day one. Treat them as design inputs, not reasons to stop. You will move faster when risk owners help shape the pilot.

What this means for your plans

It means you can move now, with care. Pick a use case tied to a clear outcome. Keep scope narrow. Anchor the plan to data you can access and trust. Involve legal and security early. Document everything you decide.

Plan for three things in parallel. Value, safety, and learning. Value means a measurable result the business will care about. Safety means guardrails and a fallback if something goes wrong. Learning means you capture what worked and what did not. That learning becomes your playbook.

Expect to revisit choices. Vendors change fast. Policies evolve. The data you need may live in other teams. Give yourself room to adjust without derailing progress.

Most of all, keep it practical. Talk about minutes saved, fewer errors, and happier customers. These outcomes win support across teams. Big claims invite debate. Small, proven wins build momentum.

Pick your first use case this quarter

Fast wins for SMEs

Start where value and simplicity meet. Three patterns deliver early. A customer service copilot that drafts replies and suggests next steps. Document processing that classifies, extracts, and files forms. Knowledge search that answers staff questions from your policies.

Why these? They sit on top of tools you already use. Email. Helpdesk. Document stores. The data is yours and close at hand. The risks are manageable with basic guardrails.

How to run them. Define the goal. For example, reduce average handle time by ten percent. Select a small team. Connect only the data they need. Write clear prompts and response templates. Add spot checks. Track time saved, accuracy, and customer impact.

What to avoid. Do not attempt a brand new platform in week one. Do not connect sensitive data without an approval path. Do not skip training. A one-hour workshop with live tasks does more than a long manual.

Ship a pilot, learn, and decide whether to scale.
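The spot checks mentioned above can be as simple as sending a fixed share of AI drafts to a human reviewer. Here is a minimal Python sketch, assuming each draft has a stable ID. The function name, the ten percent rate, and the ticket IDs are illustrative, not taken from any particular helpdesk tool.

```python
import hashlib


def needs_review(item_id: str, sample_rate: float = 0.1) -> bool:
    """Deterministically pick roughly `sample_rate` of items for human review."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return bucket < sample_rate


# Hypothetical ticket IDs; the same ID always gets the same answer.
tickets = [f"TICKET-{n}" for n in range(1000)]
to_review = [t for t in tickets if needs_review(t)]
print(f"{len(to_review)} of {len(tickets)} drafts flagged for review")
```

Hashing the ID rather than rolling a random number means the selection is repeatable, which makes the review sample easier to audit later.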

Sector snapshots

Every sector has low-friction starts. In health, think triage notes and referral summaries. Keep patient privacy at the centre and involve clinical governance. In finance, use AI to speed up know-your-customer (KYC) checks and triage inbound queries. Align with compliance from day one. In agriculture, apply models to imagery and weather data for yield or risk insights. Keep farmers in the loop so outputs fit real workflows.

In education, support teachers with marking aids and admin helpers. Keep student data controls tight and transparent. In the public sector, focus on document automation and policy Q&A for staff. Follow existing algorithm and transparency commitments.

Across all sectors, pick use cases that do not need new data collection. Use what you already hold, with consent and purpose limits respected. Start small, report outcomes, and share learnings across teams. Sector rules shape pace, but the playbook remains the same.

Data foundations you need before you start

Ten-point readiness checklist

You move faster when data basics are clear. Use this shortlist.

- Name the business problem and the measure that matters.

- List the source systems and owners.

- Confirm consent and purpose for each dataset.

- Check data quality on a small sample.

- Remove or mask sensitive fields you do not need.

- Decide who can access what, and how approvals work.

- Set logging and retention for prompts and outputs.

- Define accuracy checks and human review.

- Plan rollback if the pilot misbehaves.

- Write a short policy people can read in five minutes.

Summary table: Data readiness at a glance

| Item | Owner | Tool / Artefact | Sign-off |
|---|---|---|---|
| Problem and metric named | Business owner | One-page brief | Sponsor |
| Source systems listed | Data owner | System inventory | IT / app owner |
| Consent and purpose checked | Privacy lead | PIA / DPIA note | Privacy |
| Sample quality checked | Data owner | Sample report | Project lead |
| Sensitive fields masked | Security | DLP / redaction plan | Security |
| Access rules set | IT / Security | Access matrix | Security |
| Logging and retention set | IT | Logging plan | Security |
| Human review defined | Process owner | QA checklist | Process owner |
| Rollback plan ready | Tech lead | Runbook | Tech lead |
| Policy published | Sponsor | One-page policy | Sponsor |

Keep it light. You can refine later. The goal is enough clarity to start a safe pilot, not a perfect data warehouse.
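To make the "remove or mask sensitive fields" step concrete, here is a minimal Python sketch. It assumes records arrive as dictionaries. The allow-list, the field names, and the two patterns are hypothetical and deliberately simple, so treat them as a starting point rather than a complete redaction tool.

```python
import re

# Hypothetical allow-list: the only fields this pilot needs.
ALLOWED_FIELDS = {"ticket_id", "subject", "body"}

# Simple illustrative patterns; real data needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s-]{6,}\d")


def minimise(record: dict) -> dict:
    """Keep only allowed fields and mask emails and phone numbers in text."""
    cleaned = {}
    for key, value in record.items():
        if key not in ALLOWED_FIELDS:
            continue  # drop fields the pilot does not need
        if isinstance(value, str):
            value = EMAIL.sub("[email]", value)
            value = PHONE.sub("[phone]", value)
        cleaned[key] = value
    return cleaned


# Made-up example record.
ticket = {
    "ticket_id": 1042,
    "customer_name": "Example Person",
    "subject": "Invoice query",
    "body": "Please reply to sam@example.co.nz or call 021 555 0199.",
}
print(minimise(ticket))
```

An allow-list is used here instead of a block-list so that a new field added upstream is dropped by default until someone approves it.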

Where your data lives and who owns it

Data location and ownership affect trust. If you work with New Zealand customers, you may prefer NZ or nearby cloud regions for lower latency and clearer procurement paths. Check where models run and where prompts and outputs are stored. Clarify whether they are used for training by default. Choose settings that match your risk appetite.

Ownership is about more than storage. It includes rights to use, share, and delete. Map these for each dataset. Confirm that vendors cannot reuse your content without permission. Ensure you can export your data if you change platforms. Document these choices so procurement and privacy teams are comfortable.

Ownership inside your organisation matters too. Name a data owner for the pilot. Give them the right to stop or pause the project if controls slip. Clear ownership prevents ambiguity when timelines get tight.

Guardrails for shadow AI

People will experiment on their own. That is positive energy, but it can create risk. Shadow AI appears when staff paste sensitive content into unapproved tools. Stop it with clarity, not fear.

Give people a safe default. Approve one or two tools and explain what is allowed. Forbid copying private or customer data into public services. Offer a channel to ask for new tools. Reward teams that propose useful ideas.

Add light monitoring where appropriate. Watch for data moving to unknown places. Pair that with training. Short reminders in team meetings work. Share examples of safe prompts. Make it easy to do the right thing.

Most of all, respond fast when issues appear. Fix the gap. Update the policy. Thank the person who raised it. A supportive culture cuts risk and keeps momentum.

Safe and responsible AI in NZ

Privacy Act 2020 and the OPC

The Privacy Act sets the rules for collecting, using, and sharing personal information in New Zealand. It asks you to be clear about purpose, to collect only what you need, to keep it safe, and to give people access to their own information. AI pilots are no exception.

Map your pilot to the information privacy principles. List the data involved. Confirm consent or another legal basis. Limit access to the smallest group that can do the work. Secure storage and logging. Plan what you will do if a breach occurs.

The Office of the Privacy Commissioner provides guidance and training. Use those resources. If in doubt, ask questions early. Document decisions. Small teams often miss this step and regret it later. A few pages of notes now will save you time.

Publish a short notice for staff and customers if your pilot touches their data. Plain English earns trust.

Māori data sovereignty and the CARE principles

Data about Māori has context, history, and rights attached. Māori data sovereignty means communities should have control and benefit when their data is used. The CARE principles give you a way to act on that idea. Collective benefit. Authority to control. Responsibility. Ethics.

If your pilot involves Māori data, engage early. Ask who should be involved and how decisions will be made. Agree on purpose. Set rules for access, storage, and benefit. Share outcomes in ways that serve the community. This is practical work, not a box to tick.

Even if your first pilot does not include Māori data, build the habit now. Put the principles in your policy. Teach your teams what they mean. The goal is respect and partnership. People notice when you do this well.

A one-page AI policy and approval flow

Do not wait for a perfect policy. Write one page that covers scope, allowed uses, banned uses, data rules, review steps, and contacts. Keep it in plain English. Make it easy to find. Update it as you learn.

Set a simple approval flow. One sponsor. One risk reviewer. One technical owner. A short form that asks for the problem, the data, the controls, and the success measure. Approvals in days, not months. That speed matters when momentum is fragile.

Include a stop rule. If the pilot cannot meet a safety or privacy requirement, it pauses. No debate. Clear rules protect people and the programme.

Finish with a training note. New users must take a short session before they get access. Skills and safety go together.
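The approval form and stop rule described above could be captured in a few lines of Python. This is a sketch under stated assumptions: the class, field names, and status labels are hypothetical, and it needs Python 3.9 or later for the `list[str]` annotations.

```python
from dataclasses import dataclass, field


@dataclass
class PilotRequest:
    """Hypothetical one-page approval form; field names are illustrative."""
    problem: str
    data_sources: list[str]
    controls: list[str]
    success_measure: str
    sponsor: str
    risk_reviewer: str
    technical_owner: str
    unmet_requirements: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Names of required fields that were left blank."""
        required = ["problem", "data_sources", "controls", "success_measure",
                    "sponsor", "risk_reviewer", "technical_owner"]
        return [name for name in required if not getattr(self, name)]

    def status(self) -> str:
        """Apply the stop rule first, then check the form is complete."""
        if self.unmet_requirements:
            return "paused"
        if self.missing_fields():
            return "incomplete"
        return "ready for review"


# Made-up example request.
request = PilotRequest(
    problem="Slow replies to billing queries",
    data_sources=["helpdesk tickets"],
    controls=["masked contact details", "human review of every reply"],
    success_measure="Average handle time down 10%",
    sponsor="Head of Service",
    risk_reviewer="Privacy lead",
    technical_owner="IT lead",
)
print(request.status())  # ready for review

request.unmet_requirements.append("No retention setting for prompt logs")
print(request.status())  # paused
```

The stop rule is checked before anything else, so an unmet safety or privacy requirement pauses the pilot regardless of how complete the rest of the form is.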

Mapping to recognised frameworks

You do not need to reinvent controls. Use known frameworks to guide your design. Map your pilot to a simple subset first. Risk identification, measurement, monitoring, and incident response. Keep the language familiar to your security team.

Look at widely referenced options. A risk management framework that offers practical controls for AI systems, such as the NIST AI Risk Management Framework. A management standard that helps you operationalise policies and audits, such as ISO/IEC 42001. A cross-border law that will shape exporters and vendors, such as the EU AI Act. You will not adopt them in full on day one. You can still align your basics.

Make a table that links your controls to the framework items you chose. This shows auditors and partners that you are serious. It also helps new team members understand why controls exist. Start small and deepen over time.

Build, buy, or partner in Aotearoa

Platform choices in plain English

Choose the path that fits your stack and team. If you live in Microsoft 365, a built-in copilot can be a natural first step. If you prefer Google Workspace, look at models that integrate with Docs and Drive. If you need flexible building blocks, API-based services make sense. For teams on AWS or Azure, managed foundation model services keep security and logging inside your cloud.

Think about your data. Where does it sit today? What needs to connect? What guardrails do you require? Consider developer capacity. Do you have engineers to build and maintain a custom workflow, or do you want a managed app?

Run a small bake-off. Define the same use case. Score tools on fit, effort, control, and price. Include a proof-of-value period. Avoid long commitments until you see results.
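Scoring tools on fit, effort, control, and price can be done with a simple weighted sum. The Python sketch below uses made-up tools, scores, and weights. None of these numbers come from a real evaluation, and the weights are an assumption you should replace with your own priorities.

```python
# Hypothetical weights; they should sum to 1 and reflect your priorities.
WEIGHTS = {"fit": 0.4, "effort": 0.2, "control": 0.2, "price": 0.2}

# Made-up scores from 1 (poor) to 5 (strong). For effort and price,
# a higher score means less effort or lower cost.
SCORES = {
    "Tool A": {"fit": 4, "effort": 3, "control": 5, "price": 2},
    "Tool B": {"fit": 3, "effort": 4, "control": 3, "price": 4},
}


def weighted_score(scores: dict[str, int]) -> float:
    """Sum of score times weight across the four criteria."""
    return round(sum(scores[name] * weight for name, weight in WEIGHTS.items()), 2)


ranking = sorted(SCORES, key=lambda tool: weighted_score(SCORES[tool]), reverse=True)
for tool in ranking:
    print(tool, weighted_score(SCORES[tool]))
```

Agree the weights before anyone sees the scores. That keeps the result from being tuned to a preferred vendor.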

NZ cloud regions and data residency

Some organisations prefer New Zealand or nearby regions for latency and procurement reasons. Others care about where prompts and outputs are stored. Ask vendors to document data paths. Where does the request travel? Where are logs kept? Can you opt out of training? Can you bring your own keys?

For public sector and regulated teams, data residency can be a hard requirement. For others, it is a choice weighed against features and cost. Be explicit. Write down your minimums. Include them in vendor reviews and contracts.

Test performance from your locations. Include mobile networks if your frontline teams work on the go. A smooth experience drives adoption more than you think.

Partner selection checklist

A good partner reduces risk and speeds learning. Look for New Zealand case experience, not just vendor badges. Ask for two recent clients you can call. Check whether they can work within your procurement and privacy needs.

Assess four things. Sector knowledge. Technical depth in your stack. Change and training capability. A clear view on responsible use. Ask them to outline a small, fixed-price pilot with deliverables and exit criteria.

Watch how they communicate. Do they explain trade-offs plainly? Do they push for data you do not need? Do they offer a path to handover, or lock you in? A short checklist up front avoids poor fits later.

Consider a local partner: Net Branding Limited can run a small, fixed-price pilot with clear deliverables and exit criteria. We focus on safe adoption, NZ data needs, and practical training so your team sees value quickly.

ROI, costs, and KPIs

Time saved and value gained calculator

Keep ROI simple and honest. Start with time saved. Measure a baseline across a real week. Then run the pilot and measure the same tasks again. Multiply minutes saved per task by the total number of tasks the team completes each week (tasks per person times the number of people who do them), then divide by 60. That gives you hours returned to the business.

Translate hours into value. Some teams redeploy time to higher value work. Others reduce overtime or backlog. Be clear about which applies to you. Do not over claim.

Add quality signals. Fewer errors. Faster resolution. Better customer satisfaction. These are leading indicators that support the time story. Present the numbers in a small table that leaders can scan.

Finally, include costs on the same page. Licences, integration, and change. Show the net effect. Decision makers respect a balanced view.

Summary table: ROI quick view (example)

| Metric | Baseline | Pilot | Delta |
|---|---|---|---|
| Average minutes per task | 6.0 | 4.5 | -1.5 |
| Tasks per week (team total) | 1,200 | 1,200 | n/a |
| People doing task | 8 | 8 | n/a |
| Hours saved per week | n/a | n/a | 30.0 |
| Estimated value per hour | n/a | n/a | Enter rate |
| Value per week | n/a | n/a | Hours × rate |

Use your real baselines. Keep the calculator simple and auditable.
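The arithmetic behind the example table can be written as a small Python sketch. It reads the 1,200 tasks per week as the team total, which is what makes the 30.0 hours figure work. The function names are illustrative, and the hourly rate and weekly cost are placeholders, not figures from this article.

```python
def hours_saved_per_week(baseline_min: float, pilot_min: float,
                         tasks_per_week: int) -> float:
    """Minutes saved per task times weekly tasks, converted to hours.

    tasks_per_week is the team total. If you count tasks per person,
    multiply by headcount before calling this.
    """
    return (baseline_min - pilot_min) * tasks_per_week / 60


def net_value_per_week(hours_saved: float, hourly_rate: float,
                       weekly_cost: float) -> float:
    """Value of time returned minus the weekly cost of running the pilot."""
    return hours_saved * hourly_rate - weekly_cost


hours = hours_saved_per_week(baseline_min=6.0, pilot_min=4.5, tasks_per_week=1200)
print(hours)  # 30.0

# The rate and cost below are placeholders; enter your own.
print(net_value_per_week(hours, hourly_rate=40.0, weekly_cost=500.0))  # 700.0
```

Keeping costs in the same calculation as the savings gives leaders the net figure on one line.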

Typical cost components

Costs fall into three buckets.

- Licences: Monthly or annual fees for models or apps. Prices vary by volume and features. Plan for growth once the pilot succeeds.

- Integration: Time to connect systems and data. Even light connections need design and testing. Budget for security reviews and approvals.

- Change and training: People need support to use new tools well. Short sessions, job aids, and floor-walking help adoption. This cost is easy to skip and then regret. Keep a modest budget for it.

Put these in one view with a range for each. Leaders want to see the shape of spend before they commit.

How to track success without gaming the numbers

Pick a few metrics that matter and make them hard to game. Time to complete a task measured by the system, not self-reported. Error rates checked by a reviewer. Customer satisfaction from your existing survey. Adoption rates based on real usage, not logins.

Show baselines and deltas side by side. Report weekly in the pilot. Share results with the team. Let them see progress and raise issues early. Celebrate small wins without overselling.

Avoid vanity metrics. Prompt counts or word counts do not help. Focus on things your leadership cares about. Fewer minutes per task. Fewer escalations. Faster onboarding for new staff. Solid measures build trust in the programme.
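Here is one way to compute system-measured task time, usage-based adoption, and a baseline-versus-pilot view in Python. It is a sketch that assumes your tool can export events as (user, start, end) tuples. The event data, user names, and baseline numbers are made up for illustration.

```python
from datetime import datetime
from statistics import median


def task_minutes(events):
    """Task durations in minutes, taken from system start and end timestamps."""
    return [(done - start).total_seconds() / 60 for _user, start, done in events]


def adoption_rate(events, licensed_users):
    """Share of licensed users who completed at least one task with the tool."""
    active = {user for user, _start, _done in events}
    return len(active & set(licensed_users)) / len(licensed_users)


def delta_report(baseline, pilot):
    """Rows of (metric, baseline, pilot, delta) for side-by-side reporting."""
    return [(name, baseline[name], pilot[name], round(pilot[name] - baseline[name], 2))
            for name in baseline]


def at(hour, minute):
    """Helper for building example timestamps on one made-up day."""
    return datetime(2025, 1, 6, hour, minute)


# Made-up pilot events: (user, task_started, task_completed).
pilot_events = [
    ("ana", at(9, 0), at(9, 4)),
    ("ana", at(9, 10), at(9, 15)),
    ("ben", at(10, 0), at(10, 5)),
]

baseline = {"median_minutes_per_task": 6.0, "error_rate": 0.08}
pilot = {"median_minutes_per_task": median(task_minutes(pilot_events)),
         "error_rate": 0.05}

for row in delta_report(baseline, pilot):
    print(row)
print("adoption:", adoption_rate(pilot_events, ["ana", "ben", "cam", "dee"]))
```

Adoption is counted from completed tasks rather than logins, so a user who opens the tool and never uses it does not inflate the number.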

Your 90-day plan

Weeks 1 to 2: align, discover, decide

Start with a quick alignment session. Name the business problem. Confirm the metric. Pick the first use case. Identify the data and the owner. Choose a small team of users who will try the pilot.

Do a light discovery. Map the current workflow. Capture bottlenecks. Sample the data for quality. Decide on the tool or vendor shortlist. Draft the one-page policy and the approval form. Share it with legal and security for fast feedback.

Finish with a simple plan. Tasks, owners, dates. Keep scope tight so you can deliver inside the ninety days. Book the training slot now. People are busy. Early calendar invites keep things moving.

Weeks 3 to 6: pilot with guardrails

Build the pilot in a safe environment. Connect only the data you need. Set logging and access controls. Write prompt templates and review rules. Train the users for an hour with live tasks. Keep the tone practical.

Run the pilot on real work for two to three weeks. Track the chosen metrics each day. Hold short stand-ups to collect feedback. Fix small issues fast. Park bigger changes for a second phase.

Keep stakeholders engaged. Share a weekly dashboard with results and notes. Be candid about misses. This is how you build trust.

Weeks 7 to 12: measure, scale, or stop

Spend a week on measurement. Compare against the baseline. Gather user feedback. Capture lessons learned. Decide if the pilot met the bar for value and safety.

If it worked, plan a controlled scale-up. Expand to the next team or workflow. Update training and policy based on what you learned. Secure a budget that matches the new scope. If it did not work, close it with a short write-up. Thank the team. Pick a new use case using your fresh insight.

Either path is progress. The goal is a repeatable way to test ideas and move good ones into production.

Sources and further reading

NZ strategy and guidance

Start with public guidance on responsible use and the national direction. These sources set the tone for safe adoption in New Zealand. Look for pages that cover frameworks, surveys, and the role of agencies. They help you align language and policy so approvals move smoothly.

Bookmark the key documents your team will reuse. Policy templates. Algorithm commitments. Cross-agency survey summaries. Link them from your internal wiki so people can find them easily. Review the links every quarter and refresh the list.

Industry reports and local case material

Use local reports for adoption patterns and practical lessons. Focus on New Zealand data where possible. Global numbers can inspire but do not always map to our context. Case material from domestic pilots is even better. Short, honest write-ups of what worked and what did not will save you time.

Collect examples across sectors. Health, finance, agriculture, education, and public service. Aim for a range of sizes. Small firms and large organisations face different constraints. A shared folder of case notes becomes a valuable resource.

Security and privacy contacts

Keep a short list of contacts your team can reach when questions arise. Privacy leads. Security advisers. Vendor support channels. Sector bodies that publish alerts. Make the list visible in your wiki and pilot documents.

Agree response times. If a user spots a possible breach or misuse, they need a fast path to the right person. Run a tabletop exercise once a year to test the process. You will learn where to tighten things before a real incident forces your hand.